A duo at Brigham and Women’s Hospital has developed a new intraoperative, image-based guidance system to help surgeons more accurately visualize anatomical structures. The technology uses augmented reality to precisely register operative endoscopy views with preoperative CT or MRI images, allowing for real-time adjustment and fine-tuning based on the operator’s changing visual field.

Eduardo Corrales, MD, an otologist, neurotologist and skull base surgeon in the Division of Otolaryngology-Head and Neck Surgery, is leading the development in close collaboration with Jayender Jagadeesan, PhD, a research associate at the Brigham and assistant professor at Harvard Medical School.

Third Window Syndrome Symptoms Spurred Investigation

Dr. Corrales said he was prompted to develop the new approach after seeing patients with symptoms of third window syndrome in his clinic. Patients reported hearing an echo of their voice in their ear, dizziness when exposed to loud noises or changes in pressure and hearing their joints creaking or their eyes moving.

All of these symptoms were traced to an abnormal opening inside the inner ear called inner ear dehiscence. However, none of the patients had superior semicircular canal dehiscence, also called Minor’s syndrome, which is typically associated with the symptoms.

“The one thing these patients had in common was they all had previous skull base surgery that resulted in inner ear dehiscence,” Dr. Corrales said.

According to Dr. Corrales, complications such as inner ear dehiscence can happen when surgeons can’t adequately visualize complex anatomy in the skull base and drill too far, too deep or both. He said a surgeon’s intraoperative visualization is typically limited to the tissue surface exposed through an optical imaging modality such as a microscope or endoscope, making it difficult to identify delicate and critical structures within the dense and opaque temporal bone.

“Intraoperative navigation is further hampered by two-dimensional static images that require substantial operator interaction and interpretation with distraction from the surgical field,” he said. “These systems often register with errors above one millimeter, which limits their application in otologic and other microsurgery of the skull base.”

To provide a more reliable intraoperative, image-based guidance system, Dr. Corrales and colleagues developed a novel technology that incorporates dynamic 3D images with depth and real-time adjustment techniques along with sub-millimeter registration accuracy.

From Algorithm to OR

As a first step toward validating the technology, Dr. Corrales previously created a novel algorithm that reconstructs the surface of the visible anatomy from intraoperative endoscopy images. Now, he and colleagues are using human cadaver models to apply a real-time dense surface reconstruction of the temporal bone. They are using stereo optical video applied to lateral skull base surgery, taking the process one step closer to use in clinical practice.

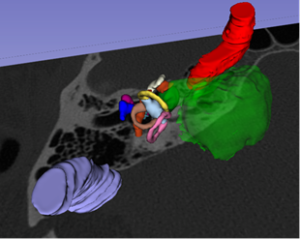

The validation involves placing preoperative fiducial markers on 12 temporal bones around the borders of a typical mastoidectomy. Intraoperative images are captured using a 3D endoscope and imported into 3D Slicer image processing software. Then, a 3D reconstructed model of the exposed tissue surface is generated using the novel surface reconstruction algorithm. Depth measurements on the 3D model can be compared to intraoperative and postoperative CT measurements at multiple discrete steps of a mastoidectomy.

The surface reconstruction and the preoperatively segmented temporal bone structures are projected on a monitor intraoperatively so the surgeon can assess the position and proximity of these nearby structures. Registration errors are calculated at each interval of the mastoidectomy. Mental, physical and temporal demand scores from NASA Task Load Index forms will be averaged to gauge the workload on the physicians and the system’s ease of use.
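
For readers curious about what a registration error check might look like in practice, the following is a minimal sketch, not the team’s actual method. It assumes the fiducial positions from the reconstructed surface and from CT have already been brought into the same coordinate frame, and it uses root-mean-square distance as the error measure, which the article does not specify. The function name `fiducial_rms_error` and all coordinates are hypothetical.

```python
import math


def fiducial_rms_error(reconstructed, reference):
    """Root-mean-square distance (mm) between paired fiducial positions.

    Both lists hold (x, y, z) tuples in millimeters, already expressed in
    the same coordinate frame. The pairing is by list position.
    """
    if len(reconstructed) != len(reference) or not reconstructed:
        raise ValueError("need equal, non-empty lists of paired points")
    squared = [math.dist(p, q) ** 2 for p, q in zip(reconstructed, reference)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical fiducial coordinates in millimeters, for illustration only.
ct_points = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
model_points = [(0.3, 0.4, 0.0), (10.0, 0.0, 0.5), (0.0, 10.0, 0.0)]

error_mm = fiducial_rms_error(model_points, ct_points)

# The article cites errors above one millimeter as a limit of existing
# systems, so one millimeter is used here as the comparison threshold.
is_submillimeter = error_mm < 1.0
print(f"RMS fiducial error: {error_mm:.3f} mm, sub-millimeter: {is_submillimeter}")
```

Repeating a check like this at each step of the mastoidectomy would give one error value per interval, which is the kind of series the validation describes.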

“By allowing the surgeon to overlay digital data or images over their environment, they can better visualize temporal bones and identify structures,” Dr. Corrales said. “Patient-specific anatomy is visualized in 360 degrees, providing superior depth perception and keeping landmarks from disappearing. It’s a relatively inexpensive way to enhance the surgeon’s precision in relation to delicate structures.”

Application in Clinical Practice

After the technology is validated in cadaveric studies, Dr. Corrales plans to take it to the operating room for further validation before embarking on the FDA approval process. If and when the technology is approved for clinical practice, patients will no longer be at risk for the types of inner ear breaches that can occur with traditional surgical techniques.

“Thanks to the strong multidisciplinary collaboration among otolaryngology and radiology, the Brigham is poised to be the first to get this technology into the OR and into the hands of surgeons,” he said. “Once we have the technology in place, we can apply it to every single aspect of the skull base, allowing us to extend its use to routine otologic, lateral skull base and anterior skull base surgeries.”